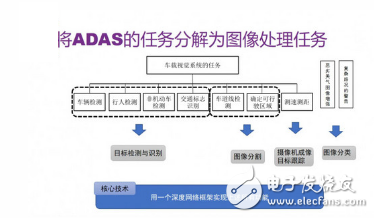

An advanced driver assistance system (ADAS) covers various tasks such as vehicle detection, pedestrian detection, traffic sign recognition, and lane line detection. At the same time, application scenarios such as driverless driving require the vehicle vision system to be fast, accurate, and able to handle many tasks at once. Traditional image detection and recognition frameworks struggle to complete multiple types of image analysis tasks in a short time.

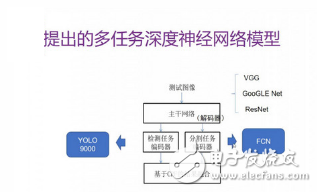

Associate Professor Yuan Xue's project team proposed a method for multitask processing in traffic scenarios using a deep neural network model. The analysis of traffic scenes mainly covers three aspects: large target detection (vehicles, pedestrians, and non-motor vehicles), small target classification (traffic signs and traffic lights), and segmentation of the drivable area (roads and lane lines).

These three types of tasks can be completed in a single forward pass of one deep neural network. This not only increases the detection speed of the system and reduces the number of parameters, but also allows detection and segmentation accuracy to be improved by increasing the number of layers in the backbone network.

The following is a summary of the content shared that day.

Overview

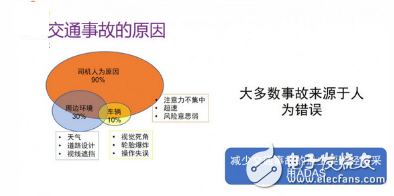

First, task analysis

According to WHO data from 2009, about 1.23 million people are killed in traffic accidents worldwide every year. By comparison, the number of people killed in the entire Korean War was almost one million. In other words, the number of people dying in traffic accidents each year is roughly equal to the death toll of a very tragic war. About 90% of traffic accidents are caused by drivers, for example through lack of concentration, speeding, or weak safety awareness. So the main way to reduce traffic accidents at present is to reduce the driver's perception errors by using an Advanced Driver Assistance System (ADAS).

An ADAS basically includes these functions: night vision assist, lane keeping, driver alerts, anti-collision warning, lane change assist, parking assist, collision mitigation, blind spot obstacle detection, traffic sign recognition, lane departure warning, driver condition monitoring, high beam assist, and so on. These features are required for ADAS.

To achieve these functions, the sensor suite generally needs to include a vision sensor, an ultrasonic sensor, a GPS & Map sensor, a Lidar sensor, a Radar sensor, and some other communication devices. But most of the products we see on the market actually offer fewer functions. Mobileye, for example, provides only lane keeping, traffic sign recognition, vehicle monitoring, and distance monitoring, which is not comprehensive. From the manufacturer's or the user's point of view, we naturally hope to use the cheapest sensor to cover as many ADAS functions as possible, and the cheapest sensor is basically a vision sensor. So when we designed the solution, we asked: can we realize more ADAS functions through the algorithm? This was the original intention of our entire research and development effort.

In addition, we need to consider some characteristics of ADAS. An ADAS (including driverless systems) runs on an embedded platform, which means it has few computing resources. We must therefore consider how to make the system respond quickly and accurately with such limited computing resources while still meeting the need for multitasking. This is the second question we had to consider.

To solve the above two problems, we first decomposed the task of ADAS. As shown in the figure, we decompose the tasks of ADAS into target detection and recognition, image segmentation, camera imaging target tracking, and image segmentation. Our research and development work over the past year has been to use one deep learning framework to achieve the above four functions at the same time.

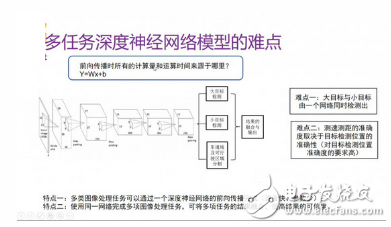

For a feed-forward network, the amount of computation and the computation time depend mainly on the number of parameters, and 80% of the parameters come from the fully connected layers, so our first idea was to remove the fully connected layers. Second, the deeper the network, the more parameters it has. So if we built target detection and recognition, image segmentation, camera imaging target tracking, and image segmentation as four separate networks, there would be four times the parameters.
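As a rough check of the claim about fully connected layers, the sketch below counts the parameters of the standard VGG-16 configuration (13 convolutional and 3 fully connected layers, 224*224 input, 1000 classes). VGG-16 is only an assumed example here; the 80% figure in the talk is a general statement, and the exact share varies by architecture.

```python
# Parameter count of the standard VGG-16 layout (assumed example network).

def conv_params(c_in, c_out, k=3):
    """Weights plus biases of one k x k convolution."""
    return k * k * c_in * c_out + c_out

def fc_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

# (input channels, output channels) of the 13 convolutional layers
conv_channels = [(3, 64), (64, 64), (64, 128), (128, 128),
                 (128, 256), (256, 256), (256, 256),
                 (256, 512), (512, 512), (512, 512),
                 (512, 512), (512, 512), (512, 512)]
# (inputs, outputs) of the 3 fully connected layers
fc_sizes = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv_params(i, o) for i, o in conv_channels)
fc_total = sum(fc_params(i, o) for i, o in fc_sizes)
total = conv_total + fc_total
fc_fraction = fc_total / total

print(f"conv: {conv_total:,}  fc: {fc_total:,}  fc share: {fc_fraction:.1%}")
```

For this configuration the fully connected layers hold roughly 89% of about 138 million parameters, which illustrates why removing them shrinks the model so much.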

Second, the model structure

This is the basic structure of the network we designed. It is divided into several parts: the backbone network (we call it the encoder), multiple branches (we call them decoders), and CRF-based result fusion. For now we have designed only two decoders for this network: one for the detection task and one for the segmentation task. Other decoders can be added later. The result fusion is mainly used to influence the weights of the backbone network. For the backbone network, we chose among some popular architectures, such as VGG 16, GoogLeNet, and ResNet. For the segmentation task we used the FCN decoder, and for the detection task we used the YOLO9000 decoder.
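The idea of one shared backbone feeding several task branches can be sketched as follows. This is a toy NumPy illustration, not the team's network: the class `MultiTaskNet`, the random weights, the layer sizes, and the pooling-based "backbone" are all assumptions made for the example, and the CRF-based fusion step is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, w):
    """1x1 convolution on an (H, W, C_in) map with weights of shape (C_in, C_out)."""
    return x @ w

def avg_pool(x, s):
    """Non-overlapping s x s average pooling on an (H, W, C) map."""
    h, w, c = x.shape
    return x.reshape(h // s, s, w // s, s, c).mean(axis=(1, 3))

class MultiTaskNet:
    """Hypothetical toy model: one backbone, a detection branch, a segmentation branch."""

    def __init__(self, feat_ch=16, det_ch=30, seg_classes=3, stride=32):
        self.stride = stride
        self.w_backbone = rng.normal(size=(3, feat_ch))
        self.w_det = rng.normal(size=(feat_ch, det_ch))
        self.w_seg = rng.normal(size=(feat_ch, seg_classes))
        self.backbone_calls = 0

    def backbone(self, image):
        # Stand-in for VGG/GoogLeNet/ResNet: downsample by `stride`, then 1x1 conv + ReLU.
        self.backbone_calls += 1
        return np.maximum(conv1x1(avg_pool(image, self.stride), self.w_backbone), 0)

    def forward(self, image):
        feats = self.backbone(image)             # computed once, shared by both branches
        det = conv1x1(feats, self.w_det)         # detection branch: per-cell predictions
        seg_small = conv1x1(feats, self.w_seg)   # segmentation branch at low resolution
        seg = seg_small.repeat(self.stride, axis=0).repeat(self.stride, axis=1)
        return det, seg.argmax(axis=-1)          # per-pixel class labels

net = MultiTaskNet()
det, seg = net.forward(rng.random((224, 224, 3)))
print(det.shape, seg.shape, net.backbone_calls)
```

Both outputs come from a single backbone pass, so adding a further branch costs only the parameters of that branch.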

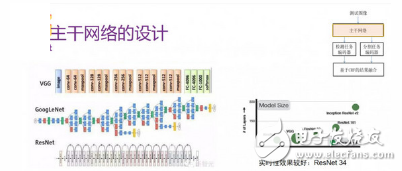

1, the backbone network

Let's take a closer look at each part of the network, starting with the backbone. For the backbone we can use VGG, GoogLeNet, or ResNet; these are interchangeable. From the picture on the right (the vertical axis is the depth of the network, and the size of each circle indicates the size of the model), we can see that ResNet does better in terms of depth and model size, so choosing ResNet gives better real-time performance.

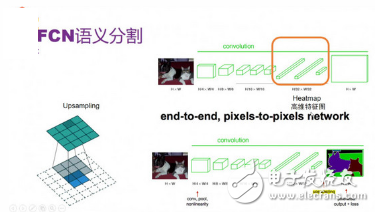

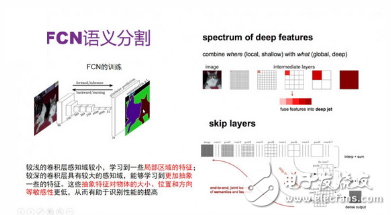

2, FCN semantic segmentation decoder

Next is the FCN semantic segmentation decoder. After an image passes through the backbone network, a high-dimensional feature map is extracted. Along the way, pooling repeatedly reduces the spatial resolution, so the output feature map is only 1/32 the size of the original image. We then use upsampling to bring it back to the original image size. The upsampling process is shown on the left; in this example a 2*2 image is upsampled into a 4*4 image.
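The 2*2 to 4*4 step can be illustrated with the simplest form of upsampling, nearest-neighbour repetition. This is only a sketch: the figure may show a different scheme, and FCN itself typically uses learned or bilinear upsampling rather than plain repetition.

```python
import numpy as np

def upsample_nearest(x, factor):
    """Repeat every pixel `factor` times along both spatial axes."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

small = np.array([[1, 2],
                  [3, 4]])
big = upsample_nearest(small, 2)
print(big)
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
```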

The upsampled result is the decoder's prediction. We compare it with the labeled image, calculate the loss, and then update the weights. One problem with upsampling is that relatively small objects are lost. We know that shallow convolutional layers have a smaller receptive field and contain more local information, while deeper convolutional layers have a larger receptive field and can learn more abstract information. FCN therefore upsamples the information from pool3, pool4, and pool5 together, so that multiple levels of information are used at the same time.
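A minimal sketch of this multi-level fusion, in the style of FCN-8s, is shown below. It assumes per-class score maps have already been computed from pool3, pool4, and pool5 (strides 8, 16, and 32), and it uses nearest-neighbour upsampling in place of the learned upsampling a real FCN would use; the function names and the random inputs are illustrative only.

```python
import numpy as np

def upsample(x, factor):
    """Nearest-neighbour upsampling of an (H, W, C) score map."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

def fuse_scores(pool3, pool4, pool5):
    """Combine per-class score maps at strides 8, 16 and 32 into full resolution."""
    fused16 = upsample(pool5, 2) + pool4    # stride 32 -> 16, add pool4 scores
    fused8 = upsample(fused16, 2) + pool3   # stride 16 -> 8, add pool3 scores
    return upsample(fused8, 8)              # stride 8 -> input resolution

rng = np.random.default_rng(0)
n_classes = 3                               # assumed number of segmentation classes
pool3 = rng.normal(size=(28, 28, n_classes))   # 224 / 8
pool4 = rng.normal(size=(14, 14, n_classes))   # 224 / 16
pool5 = rng.normal(size=(7, 7, n_classes))     # 224 / 32
labels = fuse_scores(pool3, pool4, pool5).argmax(axis=-1)
print(labels.shape)
```

The shallow pool3 scores contribute at 8-pixel granularity, so small structures that vanish at stride 32 can still influence the final label map.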

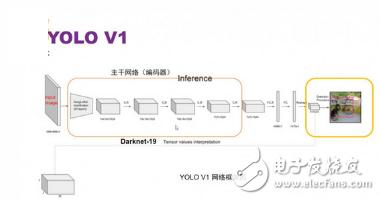

3, target detection / identification decoder YOLO

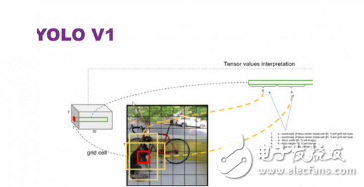

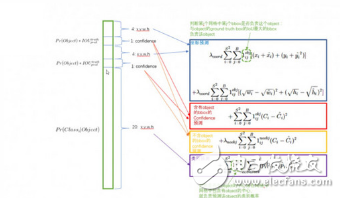

Next, let's introduce the YOLO decoder for target detection/recognition. We use the YOLO V2 decoder, but we introduce YOLO V1 first. This is the main framework of YOLO V1; its backbone network is Darknet19, which we will not go into here. We focus on the decoder. The backbone network outputs a feature map, which is mapped to a 7*7*30 feature map using a 1*1 convolution kernel. So what is this 30?

In this 7*7 grid, each cell is represented by a dot. A rectangular box containing the cell is then described by 5 values: 4 of them are x, y, w, h (the box center and its width and height), and the fifth is the confidence.

The first 10 of the 30 dimensions in YOLO V1 describe two such rectangular boxes: for each box, (x, y, w, h) is the coordinate prediction and the remaining value is the confidence prediction. The other 20 dimensions are category predictions (that is, the model distinguishes 20 possible classes, such as cars and pedestrians).
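The layout just described can be unpacked with a few lines of NumPy. This is a sketch under assumptions: the channel order follows the text (two boxes first, then 20 class scores), each box is taken to be (x, y, width, height, confidence), and the function name `decode` and the threshold value are made up for the example. Non-maximum suppression is left out.

```python
import numpy as np

S, B, C = 7, 2, 20   # grid size, boxes per cell, number of classes

def decode(output, threshold=0.2):
    """Return (row, col, box, class_id, score) for every box above the threshold."""
    assert output.shape == (S, S, B * 5 + C)
    boxes = output[..., :B * 5].reshape(S, S, B, 5)   # first 10 channels: 2 boxes x 5 values
    class_probs = output[..., B * 5:]                 # last 20 channels: class scores
    detections = []
    for row in range(S):
        for col in range(S):
            cls = int(class_probs[row, col].argmax())
            for b in range(B):
                x, y, w, h, conf = boxes[row, col, b]
                score = conf * class_probs[row, col, cls]   # class-specific confidence
                if score > threshold:
                    detections.append((row, col, (x, y, w, h), cls, float(score)))
    return detections

# One confident box of class 6 in cell (3, 4); everything else is zero.
output = np.zeros((S, S, B * 5 + C))
output[3, 4, :5] = [0.5, 0.5, 0.2, 0.3, 0.9]
output[3, 4, B * 5 + 6] = 1.0
dets = decode(output)
print(dets)
```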

Given these two considerations (removing the fully connected layers and avoiding separate networks), we use one backbone network for the shared computation and then attach multiple small branches to it according to the specific tasks. In this way, multiple image processing tasks can be completed in one forward pass of a single backbone network, the number of parameters is greatly reduced, and computation is faster. At the same time, we meet the need for multiple tasks. Finally, we can also combine the multiple results and feed them back to adjust the training process, which improves the credibility of our results.

But in this process we also encountered some difficulties. The first difficulty is that we need to detect large targets (such as vehicles) and smaller targets (such as traffic signs) in the same network. The second difficulty is that speed measurement requires a very accurate target position. We have not solved this problem yet.

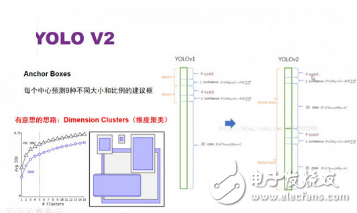

The biggest difference between YOLO V2 and V1 is the use of Anchor boxes. Anchor boxes are suggested boxes of different sizes and aspect ratios for each center prediction (for example, 9 types). Each suggested box corresponds to a 4-dimensional coordinate prediction, a 1-dimensional confidence prediction, and a 20-dimensional category prediction. YOLO V2 also proposes a very interesting idea called dimension clustering: the sizes of the Anchor boxes are obtained by clustering the boxes in the training set. In this way, for example, it ends up with 5 boxes rather than 9. Thus for the VOC data set there are a total of 5*(4+1+20)=125 output dimensions.
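Dimension clustering can be sketched as k-means over the (width, height) of the training boxes, using IoU rather than Euclidean distance to assign boxes to anchors. The code below is a minimal illustration under assumptions: the box sizes are synthetic random data, and the function names, iteration count, and seed are chosen for the example only.

```python
import numpy as np

def iou_wh(boxes, anchors):
    """IoU between (N, 2) box sizes and (K, 2) anchor sizes, all aligned at one corner."""
    inter = (np.minimum(boxes[:, None, 0], anchors[None, :, 0]) *
             np.minimum(boxes[:, None, 1], anchors[None, :, 1]))
    area_b = (boxes[:, 0] * boxes[:, 1])[:, None]
    area_a = (anchors[:, 0] * anchors[:, 1])[None, :]
    return inter / (area_b + area_a - inter)

def cluster_anchors(boxes, k, iters=50, seed=0):
    """k-means on box sizes where 'closest' means highest IoU."""
    rng = np.random.default_rng(seed)
    anchors = boxes[rng.choice(len(boxes), size=k, replace=False)]
    for _ in range(iters):
        assign = iou_wh(boxes, anchors).argmax(axis=1)
        # New anchor = mean size of its members; keep the old one if a cluster is empty.
        new = np.array([boxes[assign == j].mean(axis=0) if np.any(assign == j)
                        else anchors[j] for j in range(k)])
        if np.allclose(new, anchors):
            break
        anchors = new
    return anchors

def output_dims(num_anchors, num_classes):
    """Per-cell output size: each anchor predicts 4 coordinates, 1 confidence, and class scores."""
    return num_anchors * (4 + 1 + num_classes)

# Synthetic (width, height) pairs standing in for labeled training boxes.
boxes = np.random.default_rng(1).uniform(10, 200, size=(500, 2))
anchors = cluster_anchors(boxes, k=5)
print(anchors.round(1))
print(output_dims(5, 20))   # 125
```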

The choice of Anchor boxes in YOLO V2 and the idea of dimension clustering are particularly effective for our vehicle camera problem: because the position of our camera is relatively fixed, the size of each kind of target is also relatively fixed.

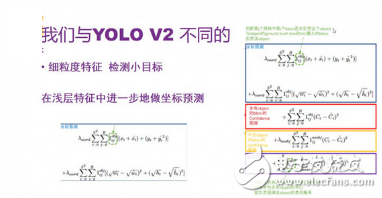

We have also made some changes on top of YOLO V2. First, we added fine-grained features to detect small targets. Second, we make additional coordinate predictions on shallow features and add them to the overall prediction, which improves the prediction of small targets.

4, some thinking

In the course of this research, we did some thinking.

First of all, in the field of computer vision, low- and mid-level vision problems focus more on the raw visual signal, are only loosely connected to semantic information, and often serve as pre-processing steps for high-level vision problems. Many CVPR papers address low-level vision problems, including deblurring, super-resolution, object segmentation, and color constancy.

Secondly, the abstract features in the last layer are very helpful for classification: they determine well what kinds of objects an image contains. But because some object details are lost, they cannot give a precise outline of each object or indicate which object each pixel belongs to.

How do we combine shallow features with deep features? This actually requires further research.

Third, the database is established

On the database side, we found that road conditions in China are very different from those in other countries, and the types of vehicles in China are also diverse. So we developed semi-automatic annotation software: vehicles are annotated automatically by an algorithm, and annotations with large errors can be corrected manually. So far we have labeled a data set of 50,000 rectangular annotations. We aim to release the data set by the end of the year, and we can also help companies build databases.

In addition, when building the database we need to expand the range of data types. For example, from the original daytime pictures we can generate night-time pictures and add them to our training samples.
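The talk does not say how the night-time pictures are generated. As a purely illustrative stand-in, the sketch below darkens a daytime RGB image with a gamma curve and a slight blue tint; the function name `simulate_night` and all parameter values are assumptions, and a real pipeline might use a learned image-to-image model instead.

```python
import numpy as np

def simulate_night(image, brightness=0.3, gamma=1.5, blue_gain=1.2):
    """Naive day-to-night augmentation for an (H, W, 3) uint8 RGB image."""
    x = image.astype(np.float32) / 255.0
    x = brightness * np.power(x, gamma)   # compress highlights and dim the whole image
    x[..., 2] *= blue_gain                # shift the color balance slightly toward blue
    return (np.clip(x, 0.0, 1.0) * 255).astype(np.uint8)

day = np.full((4, 4, 3), 200, dtype=np.uint8)   # stand-in for a bright daytime frame
night = simulate_night(day)
print(day.mean(), night.mean())
```

The rectangular annotations of the daytime image can be reused unchanged for the generated image, since only pixel intensities are altered.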

Fourth, the results show
